We learn a lot about objects by manipulating them: poking, pushing, prodding, and then seeing how they react.

We obviously can’t do that with videos — just try touching that cat video on your phone and see what happens. But is it crazy to think that we could take that video and simulate how the cat moves, without ever interacting with the real one?

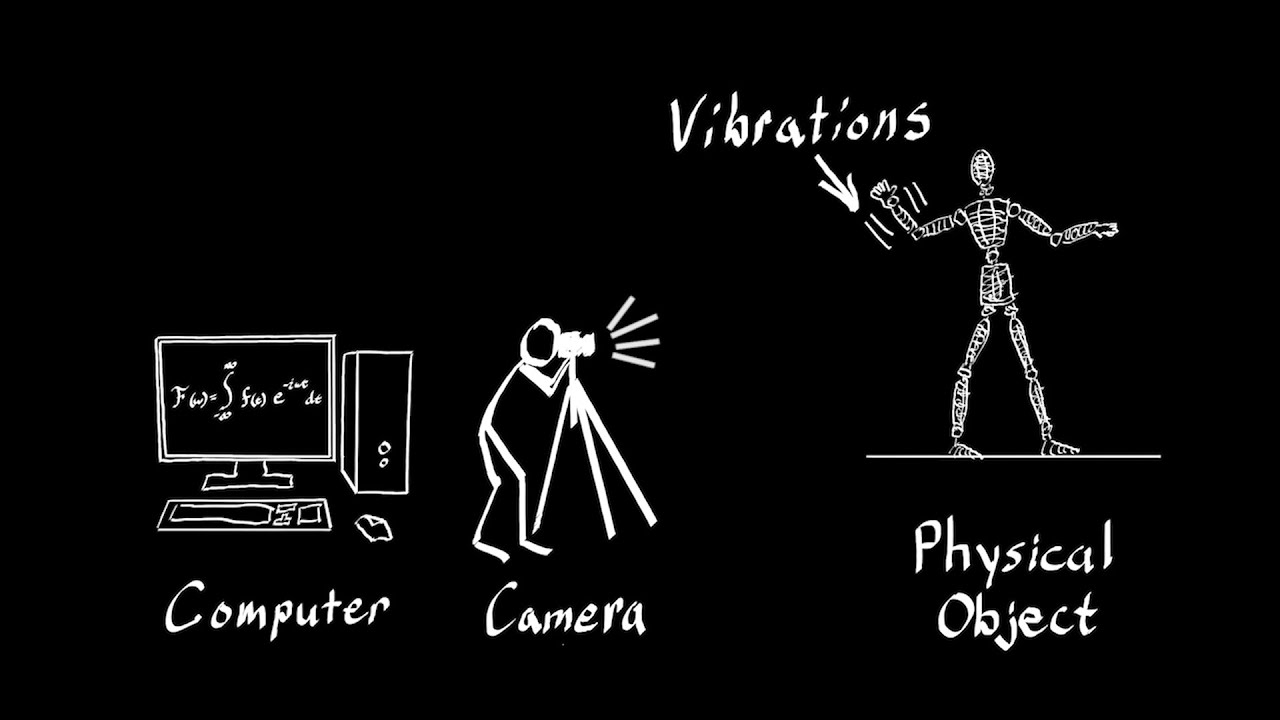

Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have recently done just that, developing an imaging technique called Interactive Dynamic Video (IDV) that lets you reach in and “touch” objects in videos. Using traditional cameras and algorithms, IDV looks at the tiny, almost invisible vibrations of an object to create video simulations that users can virtually interact with.

“This technique lets us capture the physical behavior of objects, which gives us a way to play with them in virtual space,” says CSAIL PhD student Abe Davis, who will be publishing the work this month as part of his final dissertation. “By making videos interactive, we can predict how objects will respond to unknown forces and explore new ways to engage with videos.”

Davis says that IDV has many possible uses, from filmmakers producing new kinds of visual effects to architects determining whether buildings are structurally sound. For example, he shows that while the popular Pokémon Go app can drop virtual characters into real-world environments, IDV goes a step further by letting virtual objects (including Pokémon) interact with their environments in specific, realistic ways, like bouncing off the leaves of a nearby bush.

He outlined the technique in a paper he published earlier this year with PhD student Justin G. Chen and professor Fredo Durand.

How it works

The most common way to simulate objects’ motions is by building a 3-D model. Unfortunately, 3-D modeling is expensive and can be almost impossible for many objects. While algorithms exist to track motions in video and magnify them, none can reliably simulate objects in unknown environments. Davis’ work shows that even five seconds of video can contain enough information to create realistic simulations.

To simulate the objects, the team analyzed video clips to find “vibration modes” at different frequencies, each of which represents a distinct way that an object can move. By identifying the shapes of these modes, the researchers can begin to predict how the objects will move in new situations.
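The article does not spell out how IDV turns vibration modes into motion. As a rough illustration of the general idea (standard modal superposition, not the researchers’ actual code), the sketch below treats each mode as an independent damped oscillator: a virtual “poke” at one point excites each mode in proportion to how much that mode moves at that point, and the resulting motion is the sum of the decaying mode shapes. The function name, the unit modal mass, and every mode shape, frequency, and damping value here are hypothetical choices made for illustration only.

```python
import numpy as np


def modal_impulse_response(mode_shapes, freqs_hz, damping_ratios, poke_point, impulse, times):
    """Hypothetical sketch: displacement of N points after an impulse, via modal superposition.

    mode_shapes:    (K, N) array; row k is the displacement pattern of mode k (made-up values)
    freqs_hz:       (K,) natural frequency of each mode, in hertz
    damping_ratios: (K,) damping ratio of each mode; must be < 1 (underdamped)
    poke_point:     index of the point that receives the impulse
    impulse:        impulse magnitude (unit modal mass assumed)
    times:          (T,) sample times in seconds
    Returns a (T, N) array of displacements.
    """
    mode_shapes = np.asarray(mode_shapes, dtype=float)
    omega = 2.0 * np.pi * np.asarray(freqs_hz, dtype=float)   # angular frequencies
    zeta = np.asarray(damping_ratios, dtype=float)
    if np.any(zeta >= 1.0):
        raise ValueError("this sketch only handles underdamped modes (damping ratio < 1)")
    omega_d = omega * np.sqrt(1.0 - zeta**2)                   # damped frequencies

    # Project the poke onto each mode: a mode that barely moves at the
    # poked point is barely excited.
    p = mode_shapes[:, poke_point] * impulse                   # (K,)

    # Closed-form impulse response of each damped oscillator, sampled at all times.
    t = np.asarray(times, dtype=float)[:, None]                # (T, 1)
    q = (p / omega_d) * np.exp(-zeta * omega * t) * np.sin(omega_d * t)  # (T, K)

    # Sum the modes, each weighted by its time-varying amplitude.
    return q @ mode_shapes                                     # (T, N)


# Illustrative two-mode "object" sampled at five points; point 0 is fixed.
shapes = np.array([
    [0.0, 0.38, 0.71, 0.92, 1.0],     # slow first mode (made-up shape)
    [0.0, 0.92, 0.71, -0.38, -1.0],   # faster second mode (made-up shape)
])
times = np.linspace(0.0, 2.0, 61)     # two seconds at 30 frames per second
disp = modal_impulse_response(shapes, [1.5, 4.0], [0.05, 0.08],
                              poke_point=4, impulse=1.0, times=times)
print(disp.shape)  # (61, 5)
```

In a video setting, each row of `mode_shapes` would be a per-pixel displacement field rather than five numbers, and the computed displacements would be used to warp the original frame. The closed-form oscillator response is used here instead of a time-stepping integrator simply to keep the sketch short and numerically stable.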

“Computer graphics allows us to use 3-D models to build interactive simulations, but the techniques can be complicated,” says Doug James, a professor of computer science at Stanford University who was not involved in the research. “Davis and his colleagues have provided a simple and clever way to extract a useful dynamics model from very tiny vibrations in video, and shown how to use it to animate an image.”

Davis used IDV on videos of a variety of objects, including a bridge, a jungle gym, and a ukulele. With a few mouse clicks, he showed that he can push and pull the image, bending and moving it in different directions. He even demonstrated how he can make his own hand appear to telekinetically control the leaves of a bush.

“If you want to model how an object behaves and responds to different forces, we show that you can observe the object respond to existing forces and assume that it will respond in a consistent way to new ones,” says Davis, who also found that the technique even works on some existing videos on YouTube.

Applications

Researchers say that the tool has many potential uses in engineering, entertainment, and more.

For example, in movies it can be difficult and expensive to get CGI characters to interact realistically with their real-world environments. Doing so requires filmmakers to use green screens and create detailed models of virtual objects that can be synchronized with live performances.

But with IDV, a videographer could take video of an existing real-world environment and make some minor edits like masking, matting, and shading to achieve a similar effect in much less time — and at a fraction of the cost.

Engineers could also use the system to simulate how an old building or bridge would respond to strong winds or an earthquake.

“The ability to put real-world objects into virtual models is valuable for not just the obvious entertainment applications, but also for being able to test the stress in a safe virtual environment, in a way that doesn’t harm the real-world counterpart,” says Davis.

He says that he is also eager to see other applications emerge, from studying sports film to creating new forms of virtual reality.

“When you look at VR companies like Oculus, they are often simulating virtual objects in real spaces,” he says. “This sort of work turns that on its head, allowing us to see how far we can go in terms of capturing and manipulating real objects in virtual space.”

This work was supported by the National Science Foundation and the Qatar Computing Research Institute. Chen also received support from Shell Research through the MIT Energy Initiative.